In my mind, the heart of Spawning Tool is the extracted, readable build orders. To get to that point, however, there are a lot of replays to sort through, and I think that’s where the tagging system becomes valuable. Many of the tags are auto-generated from replay data or extrapolated from past data. The biggest area still requiring human analysis, however, is labeling build orders, and judging from the front page, that work hasn’t been well distributed across the community.

And I admit that the experience so far has sucked. You had to find a replay on your own, and it took at least 4 or 5 clicks to punch in a build order. Hopefully, however, it’s a lot easier now with the new system for labeling build orders. There are a few parts to this.

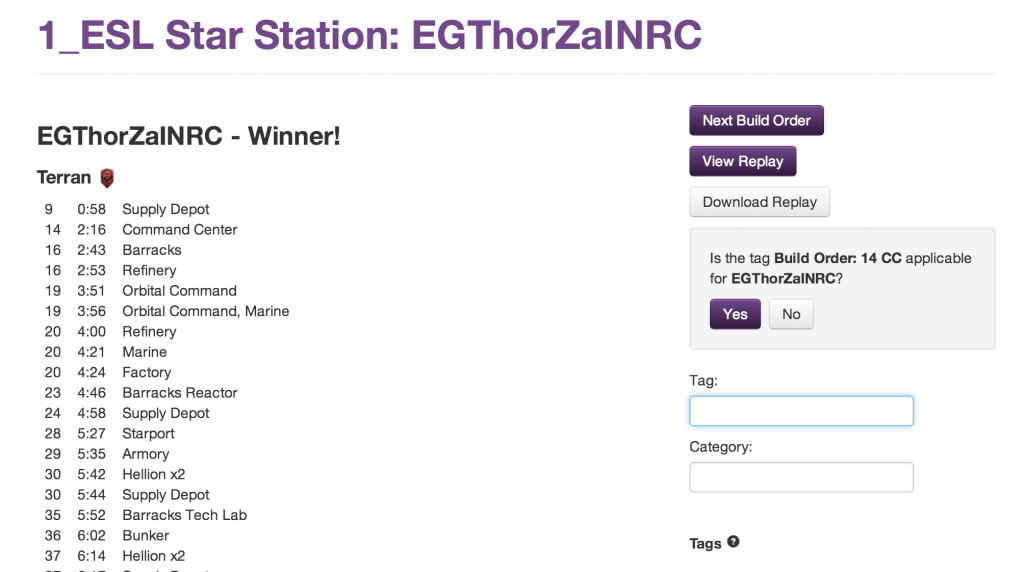

First, you can now approve and reject suggested tags with one click. Previously, it was hard to know what the taxonomy of build orders was, and it took too many steps. Now, there are a few thousand procedurally generated suggested tags for you to approve. You can take a look at the build order and hit “yes” or “no” to say whether the tag is appropriate.

Second, the interface to tag replays is up top on this page. Previously, it was hidden at the bottom of the page on the sidebar and took a click to open up. Now, the box is focused automatically, so you can add tags immediately on page load without having to click or scroll anywhere. Hopefully, you can pair up the action of approving or rejecting a suggested tag with more detailed tags on top of that.

Finally, you now see isolated build orders and can browse replay-to-replay. Previously, you had to bounce back and forth from the browse page (or open 10 tabs at once like me) to tag several replays in a row. In the build order labeling pages, you can jump from one random build to the next and stay on a roll.

The link to the build order labeler is on the front page, so you can hop straight into that and check out the world of actual in-game builds. Remember to log in as well so that your tags are associated with you and counted on the leaderboard.

One more thing: my hope is that labeling build orders can become more and more automatic (though still with some human intervention). Machine learning is in the works and can generate suggestions, but it will only improve with more hand-labeled training data. I’m not sure where the tipping point is, but I’m excited to get to a point where that can take off on its own!