I have to admit, my interest in StarCraft has waned over the past few months. I haven’t watched much StarCraft this year. /r/starcraft hasn’t been as interesting to me. Even my weekly games with friends have switched to StarCraft every other week, with a different game in the off-weeks. I think the biggest problem is that the game has just gotten kind of stale for me since I don’t play very seriously.

But at BlizzCon this weekend, Blizzard showed off the changes to multiplayer in Legacy of the Void (LotV). And now I’m really excited again.

https://www.youtube.com/playlist?list=PL4i11hPX5tNBXRY30dLU30xknsrmy6-Ex

For a good summary of all the specific changes, see the TeamLiquid thread. To see a show match with the new units, here’s Stardust and jjakji playing against MC and HyuN.

People much more qualified than I am have done a lot of unit analysis, so I’m going to take a different approach and offer my thoughts across a few topics. Instead of a long post here, I’m going to write a series of blog posts on different topics, each of which is roughly summarized below.

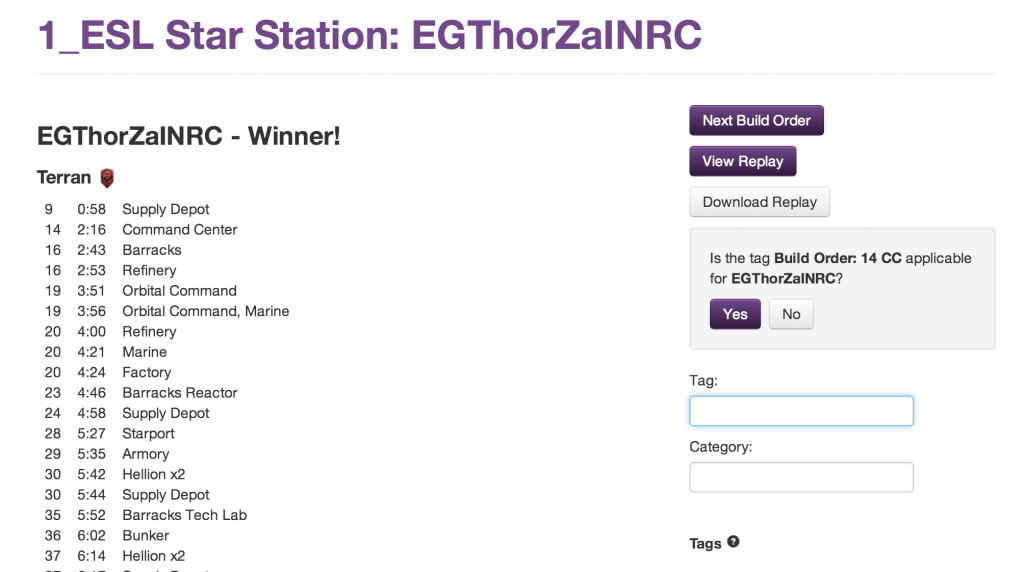

As a guy whose blog and website are dedicated to build orders, the biggest change might be starting at 12 workers instead of 6. This change should instantly void every build order that exists, which might actually be an awesome way to reset.

Diversity is really interesting

Blizzard is very careful to make sure that all units have a place in the game. The new units like the Ravager, Disruptor, and Cyclone look awesome, but regardless of the details, I think having more options alone will make the game much more enduring.

Micro your hearts out (part 1, part 2)

Although we appreciate drops, players of all levels tend towards deathball strategies with large numbers of similar units attacking together. Blizzard has made a lot of changes to emphasize the abilities of individual units to make combat broader and more dynamic.

Team games are probably dead, but that’s okay

These days, I play a lot of 3v3 and 4v4 with my friends of varying skill levels. Blizzard’s changes to the early game and micro could be a deathblow to this format. To compensate, they’re adding new modes that I think people will like a lot.

Let’s be supportive during beta

I think a lot of people are very excited right now about the changes. Because Blizzard’s development policy is to refine over time, things are never going to be more exciting than right now. Let’s figure out how we can keep that energy in the coming months.

Keep an eye out for upcoming posts!

What 3 changes are you most excited about in Legacy of the Void?

- Increased starting workers from 6 to 12 (22%, 11 Votes)

- Archon mode (16%, 8 Votes)

- New Terran units (Cyclone, Herc) (16%, 8 Votes)

- Automated tournaments (12%, 6 Votes)

- Single-player campaign (10%, 5 Votes)

- "New" Zerg units (Lurker, Ravager) (8%, 4 Votes)

- Allied commanders (6%, 3 Votes)

- Existing Terran tweaks (Battlecruiser, Siege Tank, etc) (6%, 3 Votes)

- Existing Protoss tweaks (Carriers, Oracle, etc) (2%, 1 Votes)

- Existing Zerg tweaks (Nydus Worm, Swarm Hosts, etc) (2%, 1 Votes)

- New Protoss units (Disruptor) (0%, 0 Votes)

Total Voters: 20